
Artificial Intelligence – the disease and the cure

In the epilogue to the FADS book, I predicted that “AI will be the disease and the cure,” as artificial intelligence gives marketers the power to know what consumers want and to modify behavior more precisely in order to sell their products. As we find our way toward the middle path, there have been, as I suspected, some nasty side effects.

Helping the bots perfect their game

During the pandemic, we mainlined more media than ever before. In the early days, a Tiger King binge united the nation. We ordered meals and groceries online, out of necessity and not just convenience.

We were on Facebook.

We were on Zoom. More Zoom than any of us wanted.

While we shopped, they collected the data

We were too exhausted to think much about how we were helping algorithms learn at an accelerated pace: feeding those datasets, helping them perfect their game, and finally giving the powers that be all the information they could ever want about our thoughts, fears, and desires, all at once. With a little social listening, they had everything they needed to target us with just the right messaging.

How do AI chatbots like ChatGPT learn?

Large language models are like those genius kids who can read a dictionary and remember every single word (except they’re machines). They analyze huge amounts of text data and use fancy algorithms to find patterns in language. You could say they’re looking for the secret code that makes language tick.
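If you’re curious what “finding patterns” looks like at its very simplest, here’s a toy sketch in Python. It just counts which word tends to follow which in a scrap of text. To be clear, this is an illustration and not how ChatGPT works under the hood: real models use neural networks trained on vastly more data, and the function names and sample sentence here are made up for the example.

```python
from collections import Counter, defaultdict


def count_next_words(text):
    """Count which word follows each word in the text (hypothetical helper)."""
    words = text.lower().split()
    following = defaultdict(Counter)
    # Pair every word with the word that comes right after it.
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def most_likely_next(following, word):
    """Return the word seen most often after `word`, or None if unseen."""
    options = following.get(word.lower())
    if not options:
        return None
    return options.most_common(1)[0][0]


# Made-up sample text, purely for illustration.
sample = "we were on facebook we were on zoom we were on zoom again"
patterns = count_next_words(sample)

print(most_likely_next(patterns, "on"))  # prints "zoom"
```

Feed it more text and the guesses get better. That’s the basic intuition, scaled down to pocket size.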

There are a few different ways these models can learn.

Some use explicit rules and patterns (like the grammar rules your English teacher made you memorize).

Others learn the way you would from a teacher who hands you the answers to the test and asks you to match them up (aka supervised learning).

And then there are some real rebels who just look at a bunch of data without any labels and try to figure out what’s going on (unsupervised learning).

To improve performance further, some models use a reward system (reinforcement learning), where they get feedback on their output and adjust their behavior accordingly. It’s like training a dog, but instead of treats, they get a thumbs up.

Overall, these language models are always being tuned to improve their grasp of language. They’re like the nerdy bookworms of the AI world.
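The thumbs-up idea behind reinforcement learning can also be sketched in a few lines of Python. This is a deliberately tiny toy, assuming a made-up bot with two canned replies and a made-up feedback function. It is not the actual training recipe behind any real chatbot, and every name in it is hypothetical.

```python
def train_with_feedback(replies, give_feedback, rounds=20, learning_rate=0.5):
    """Nudge each reply's score toward the feedback it receives (toy example)."""
    scores = {reply: 0.0 for reply in replies}
    for step in range(rounds):
        if step < len(replies):
            # First pass: try every reply once.
            reply = replies[step]
        else:
            # Afterwards: stick with whatever has scored best so far.
            reply = max(scores, key=scores.get)
        reward = give_feedback(reply)
        # Move the score part of the way toward the reward just received.
        scores[reply] += learning_rate * (reward - scores[reply])
    return scores


PUSHY = "BUY NOW!!!"
HELPFUL = "Here are a few options you might like."


def thumbs_up(reply):
    """Hypothetical human feedback: 1.0 for a thumbs up, 0.0 otherwise."""
    return 1.0 if reply == HELPFUL else 0.0


scores = train_with_feedback([PUSHY, HELPFUL], thumbs_up)
best_reply = max(scores, key=scores.get)
print(best_reply)  # prints "Here are a few options you might like."
```

The bot never “understands” why one reply is better. It just keeps doing more of whatever earned the thumbs up.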

Where we are today

Artificial intelligence is doing the legwork of understanding how to initiate our binge behavior after we gave it all that Netflix and online shopping data. Bots follow us all over the internet, learning how to nudge us to keep coming back, to buy, to engage. They learn from our every move. They chat with us online (and sometimes, each other). They produce tons of content perfectly optimized for SEO to “drive traffic.” And often, we don’t even notice or care.

“Researchers from the University of Florida note that artificial intelligence and natural language processing systems are getting so good at interacting with people, callers often don’t know whether they’re speaking to a real person or a robot,” Chris Melore reported.

we have arrived at peak AI hype accompanied by minimal critical thinking

— Abeba Birhane (@Abebab) June 12, 2022

Can AI have a soul?

In 2022, the media went into a frenzy over Blake Lemoine, an engineer in Google’s Responsible AI organization, who spoke out about Google’s LaMDA chatbot. If you missed that whole thing, the Guardian explained in June 2022: “Lemoine described the system he has been working on since last fall as sentient, with a perception of, and ability to express thoughts and feelings that was equivalent to a human child.”

Science fiction has been asking “what makes us human” and “what if AI takes over” longer than you or I have been alive, and while it’s a fun thought experiment, we all know that’s not where we’re at in 2023, right?

Google denies the chatbot is sentient, of course, and Gary Marcus wrote in “Nonsense on Stilts”: “There are a lot of serious questions in AI, like how to make it safe, how to make it reliable, and how to make it trustworthy. But there is absolutely no reason whatsoever for us to waste time wondering whether anything anyone in 2022 knows how to build is sentient. It is not.”

But then… ChatGPT came for our jobs!

Joking about LaMDA and the future of language models, chatbots, and artificial intelligence was all fun and games in 2022. But then 2023 arrived with a flurry of stories about ChatGPT, the future of work, and the jobs that will be lost to ChatGPT, plus more of the general panic we’d already seen.

These think pieces detail how we’ll all be replaced by robots—and soon. But they also leave out some very important things.

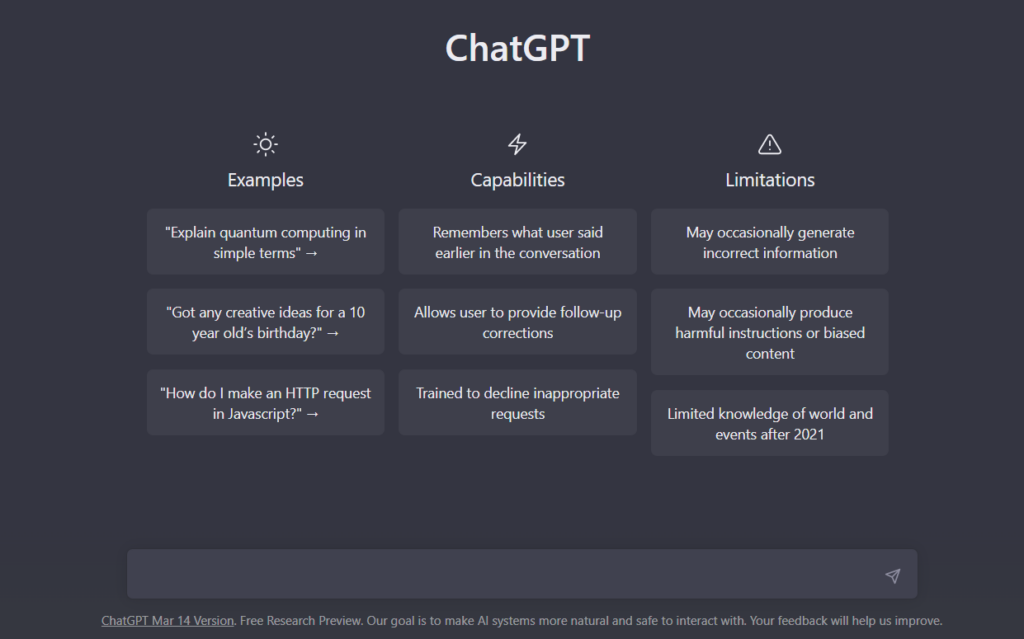

Notice the “limitations” in the screenshot above? ChatGPT can’t fact-check, and in our experiments, it sometimes produced poorly written content bordering on nonsense. It’s trained to imitate humans, which can also be a problem in ways you might not think of at first.

We think the whole “AI is taking our jobs” drama is a little bit overblown.

Ian Bogost tells us more in the Atlantic:

Perhaps ChatGPT and the technologies that underlie it are less about persuasive writing and more about superb bullshitting. A bullshitter plays with the truth for bad reasons—to get away with something. Initial response to ChatGPT assumes as much: that it is a tool to help people contrive student essays, or news writing, or whatever else. It’s an easy conclusion for those who assume that AI is meant to replace human creativity rather than amend it.


Is there a middle path for Artificial Intelligence?

In addition to TAKING OUR JOBS, we’re hearing a lot about AI being used to cheat in school, generate false images, and create “deepfake” videos that seem real (leading to some very real issues around consent). People will misuse technology against others who don’t quite understand how it works, and regulation of the industry, though it’s coming, has been slow to catch up. And things are moving fast.

Cybersecurity engineer Eivind Horvik sent the FADS team this chilling piece on how criminals exploit AI. With it, he said, “We are the masters of our universe and the creators of tools, and our tools are becoming so good they don’t even need anyone to operate them. But we shouldn’t worry about the tools becoming too advanced, but rather our society not being advanced enough to keep up.”

If we know anything, it’s that eventually there will be a middle path.

Until we get there, things will continue to be messy. Where there’s a pretty penny to be made, there will be companies collecting our data to train models that sell solutions for everything from helping people with mental health needs and addictions (even the ones linked to AI) to helping keep the nation secure.

Learn to use AI, and teach those kids

With more youth than ever engaging in STEM, I have a great deal of hope for the future. The digital natives are transforming the way we engage with technology; they know how it works, and how to use it. Teachers are talking about how to teach with it, rather than banning its use.

Philosophy, critical thinking, digital ethics, and media literacy should be viewed as critical companions to the STEM curriculum. As we learned from this whole LaMDA thing, being a brilliant engineer or expert in ethics doesn’t mean one has the ability to understand the larger philosophical questions around sentience.

Ball of confusion: One of Google’s (former) ethics experts doesn’t understand the difference between sentience (aka subjectivity, experience), intelligence, and self-knowledge. (No evidence that its large language models have any of them.) https://t.co/kEjidvUfNY

— Steven Pinker (@sapinker) June 12, 2022

Seeing how AI works, noticing the way it’s woven into everyday aspects of our lives, and tuning in to how our information is collected and used… all of this goes a long way. As I said in a previous blog post, and in the book, there’s really no stopping it.

If you’re a marketing or communications professional, AI-generated content can make it easier to do parts of your job. But AI can’t do everything, and it’s really important for all of us to understand its limitations.

With things moving as fast as they are, we’ll have to do our best to keep up, and to make sure our kids have what they need to understand these tools, use them well, and understand themselves.

People, after all, are predisposed to anthropomorphize, or ascribe human qualities to nonhumans. We name our boats and big storms; some of us talk to our pets, telling ourselves that our emotional lives mimic their own.

Whatever the future holds for AI, let’s meet it on the middle path.
